| Attribute | Detail |
|---|---|
| Type | Digital electronic device |
| Capabilities | Complex mathematical calculation • Information processing |
| First conceptualized | 1830s |
| Original applications | Scientific • Military |
| Early accessibility | Expensive, room-sized machines limited to governments, universities, and large corporations |
| Fundamental role | Cryptography • Aeronautics • Nuclear physics |
| Transition | Late 20th century, toward smaller, more affordable consumer designs |
| Current prevalence | Ubiquitous in homes and businesses worldwide |

The computer is a digital electronic device capable of performing complex mathematical calculations, processing information, and storing data. First conceptualized in the 1830s, the computer has evolved from cumbersome, room-sized machines accessible only to governments, universities, and large corporations into the ubiquitous personal devices that pervade modern life.

The foundations of the modern computer date to the 1830s, when the English mathematician Charles Babbage designed, but never fully built, the Analytical Engine, the first design for a general-purpose computer. The design introduced key principles of digital computing, including programming by punched cards and the separation of memory (the "store") from the processing unit (the "mill").

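Over the following century, other mathematicians laid further theoretical groundwork. Ada Lovelace, writing about the Analytical Engine in 1843, published what is often regarded as the first computer program. George Boole developed the algebra of logic that now bears his name, which later became the basis of digital circuit design. In 1936, Alan Turing formalized the notion of computation itself. Mechanical calculators using gears and levers performed basic arithmetic throughout this period, but functional programmable computers did not appear until the late 1930s and early 1940s.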

Computing technology did not become advanced and reliable enough for practical use until the mid-20th century. The first widespread applications were in mathematics, science, and engineering, where the computer's ability to perform complex calculations rapidly proved invaluable.

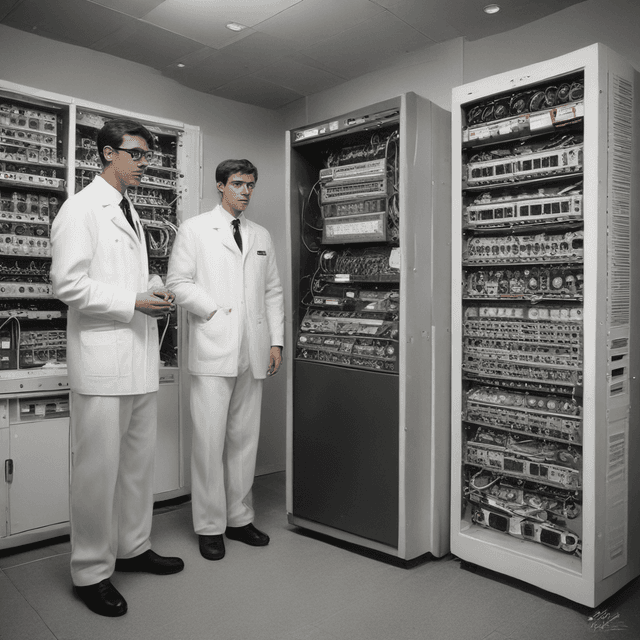

Pioneering machines such as Britain's Colossus and the American ENIAC were enormous, room-sized installations that consumed large amounts of electricity and required teams of operators. They were used primarily by government agencies, military research laboratories, and universities for tasks such as cryptography, ballistics, and aerodynamic modeling. Access to these powerful but scarce resources was tightly restricted.

Through the mid-20th century, computers and related calculating machines played a central role in military and aerospace development, supporting efforts such as the Manhattan Project, the space race, and various weapons development programs.

Demand for ever more powerful computers drove rapid advances in hardware, as vacuum tubes gave way to transistors and then to integrated circuits. It also drove advances in programming. Early high-level languages such as FORTRAN (1957) and COBOL (1959) let programmers write instructions in a more readable notation than raw machine code, making these machines easier to use.

However, computers remained prohibitively expensive and inaccessible to the general public. They were the exclusive domain of large organizations, research institutions, and the military-industrial complex.

Only in the late 20th century did computing technology become small, cheap, and easy enough to use to enter the consumer market. The arrival of the microprocessor in the early 1970s, followed by personal computers and operating systems with graphical user interfaces (GUIs) through the 1970s and 1980s, opened computing to businesses and households worldwide.

Today, desktops, laptops, tablets, and smartphones are everyday tools for work, education, entertainment, and social connection. Computers now underpin nearly every aspect of modern life, from the workings of the global economy to the way people communicate and access information. They have transformed human civilization in ways scarcely imaginable when the machine was first conceived nearly two centuries ago.